Introduction: The Hidden Cost of Inventory Errors

In the fast-moving tire distribution industry, every detail counts. Tire distributors manage thousands of SKUs, multiple brands, and a wide range of sizes, models, and production dates, all while ensuring that the right tire reaches the right retailer or service center on time. Despite advancements in warehouse technology, inventory errors remain one of the most persistent and costly problems in the industry.

Misidentified tires, mismatched records, and misplaced inventory can cost distributors millions annually in lost revenue, inefficiency, and customer dissatisfaction. Traditional methods such as barcode labels and manual entry simply can’t keep up with the complexity and scale of modern tire logistics.

This is where AI tire sidewall scanning becomes a game changer. By using intelligent image recognition technology, tire distributors can automate the identification process, streamline warehouse operations, and reduce inventory errors by up to 90%.

The Limitations of Traditional Inventory Tracking

Most tire distributors have relied on conventional methods for decades: barcode scanning, manual labeling, or RFID systems. While these approaches once represented the cutting edge of logistics, they now pose several challenges in the modern environment.

1. Label Dependence

Barcodes and stickers can easily fall off, fade, or become unreadable in warehouses where dirt, oil, and moisture are common. Once a label is missing or damaged, tracking that tire becomes a manual, error-prone task.

2. Manual Data Entry Errors

Human operators must often record tire specifications like size, brand, or DOT number manually, especially when dealing with unlabelled stock. This process is slow and subject to input mistakes, particularly in high-volume operations.

3. Time-Consuming Audits

Warehouse audits, often done manually, can take days or weeks to verify tire counts and models. Each round of checking interrupts operations and increases labor costs.

4. Limited Real-Time Visibility

Traditional tracking systems often update in batches, which means real-time accuracy is limited. This delay can cause mismatched stock reports and missed sales opportunities.

In short, outdated inventory management methods cannot provide the precision or speed today’s tire distributors need.

The Rise of AI-Driven Tire Sidewall Scanning

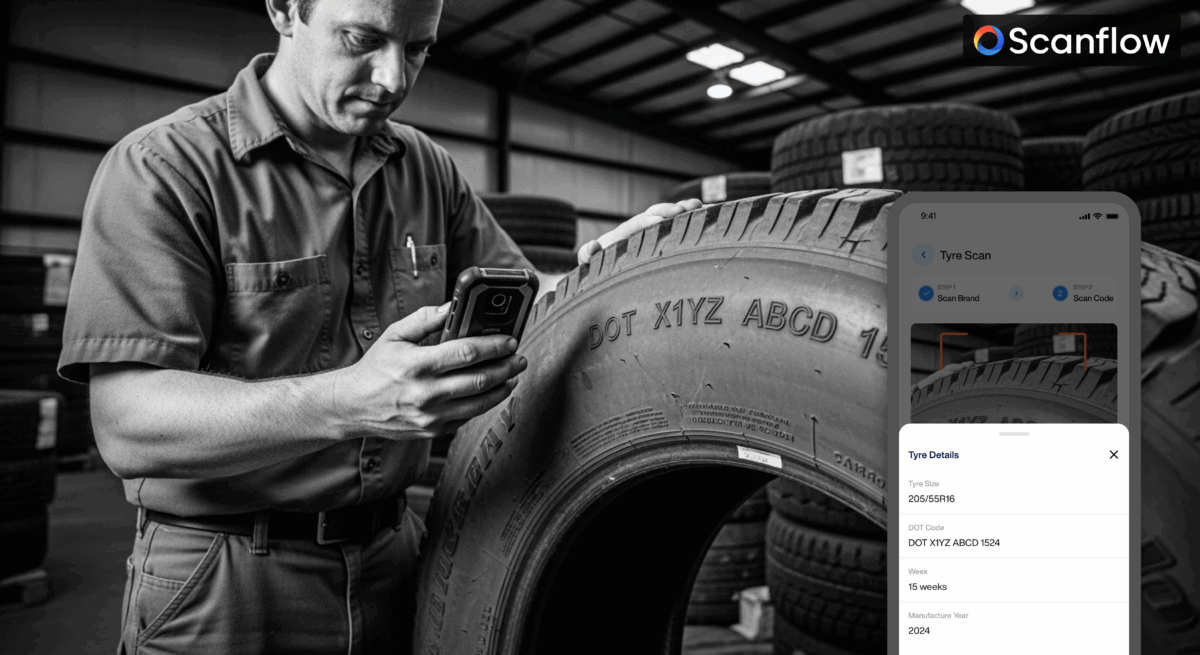

To solve these persistent issues, forward-thinking distributors are adopting AI-based tire sidewall scanners, an advanced technology that leverages computer vision, pattern recognition, and machine learning to identify tires based on their unique embossed sidewall details.

Unlike barcodes or RFID tags, the AI tire sidewall scanner reads the tire itself, extracting key details such as brand, model, size, and DOT code directly from the physical tire. By focusing on the natural imprints on the tire, AI scanning removes the dependency on external labeling altogether.

The result? Faster, more accurate, and fully automated tire identification.

How the Tire Sidewall Scanner Works

The tire sidewall scanner for distributors uses a high-resolution imaging system combined with AI-based pattern recognition algorithms. Here’s how it works step by step:

1. Image Capture:

As the tire passes through the scanning station or handheld unit, cameras capture multiple angles of the sidewall.

2. AI Recognition:

The system’s deep learning model identifies embossed details such as the manufacturer’s name, size (e.g., 205/55R16), load index, and DOT code.

3. Data Extraction and Verification:

Extracted information is matched against existing inventory databases to confirm the model and stock status.

4. Real-Time Syncing:

The scanner connects with warehouse management systems (WMS) or ERP software to automatically update stock counts, movement logs, and dispatch records.

This automated process eliminates manual typing, barcode dependency, and the risk of human oversight, all while ensuring that every tire in the warehouse is accounted for accurately and instantly.
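The data-handling part of these steps (everything after the image recognition itself) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Scanflow’s implementation: the recognition model is assumed to return the sidewall text as a plain string, and `TireSize`, `parse_size`, `record_scan`, the `catalog` lookup, and the `stock_counts` dictionary are all hypothetical names standing in for a real WMS or ERP integration.

```python
import re
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# Metric size marking such as 205/55R16:
# section width (mm) / aspect ratio, R for radial, rim diameter (inches).
SIZE_PATTERN = re.compile(r"(\d{3})/(\d{2})R(\d{2})")


@dataclass(frozen=True)
class TireSize:
    width_mm: int
    aspect_ratio: int
    rim_in: int


def parse_size(text: str) -> Optional[TireSize]:
    """Pull the first size marking out of recognized sidewall text."""
    match = SIZE_PATTERN.search(text)
    if match is None:
        return None
    width, aspect, rim = (int(group) for group in match.groups())
    return TireSize(width, aspect, rim)


def record_scan(
    recognized_text: str,
    brand: str,
    catalog: Dict[Tuple[str, TireSize], str],
    stock_counts: Dict[str, int],
) -> Optional[str]:
    """Verify a scan against the catalog (step 3) and update stock (step 4).

    Returns the matched SKU, or None when the scan needs manual review.
    """
    size = parse_size(recognized_text)
    if size is None:
        return None
    sku = catalog.get((brand, size))
    if sku is None:
        return None
    stock_counts[sku] = stock_counts.get(sku, 0) + 1
    return sku
```

A scan that cannot be parsed or matched returns `None` rather than guessing, so that it can be routed to a person for review instead of silently corrupting the stock count.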

Why Tire Distributors Are Adopting AI Scanning

1. Unmatched Accuracy and Consistency

Unlike human operators who may fatigue or overlook details, an AI tire sidewall scanner maintains consistent accuracy across thousands of scans. Even worn, dusty, or partially visible markings can be recognized with precision, ensuring reliable tracking in all warehouse conditions.

2. Significant Reduction in Inventory Errors

Studies and field data show that distributors using AI scanning experience up to 90% fewer inventory discrepancies. This improvement translates into fewer shipment delays, reduced customer complaints, and tighter control over stock valuation.

3. Improved Operational Speed

With manual methods, scanning and recording tire details might take 20–30 seconds per unit. AI scanning can process the same tire in under 3 seconds, which works out to a per-scan speedup of roughly 7× to 10× or more.
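The arithmetic behind that comparison is easy to check. The short Python sketch below uses the 20–30 second and 3 second figures quoted above; at exactly 3 seconds per scan the ratio is about 6.7× to 10×, and any scan faster than 3 seconds pushes it higher. The function names are illustrative only.

```python
def tires_per_hour(seconds_per_tire: float) -> float:
    """Scans completed in one hour at a steady per-tire time."""
    return 3600 / seconds_per_tire


def speedup(manual_seconds: float, ai_seconds: float) -> float:
    """How many times faster the AI scan is than the manual one."""
    return manual_seconds / ai_seconds


for manual in (20, 30):
    print(
        f"{manual}s manual vs 3s AI: {speedup(manual, 3):.1f}x, "
        f"{tires_per_hour(manual):.0f} -> {tires_per_hour(3):.0f} tires/hour"
    )
```

These are per-scan figures for a single station; overall warehouse throughput also depends on handling, movement, and staging time, so it will improve by less than the raw scan ratio.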

4. Seamless Data Integration

AI tire identification systems are designed to integrate effortlessly with leading WMS and ERP solutions. That means all tire data, from arrival to dispatch, is automatically captured, stored, and synced, ensuring end-to-end visibility without manual intervention.

5. Enhanced Traceability and Compliance

For safety and regulatory compliance, tracking DOT codes is essential. AI scanning makes it easy to maintain a digital record of every tire’s lifecycle, from manufacturing batch to end user. This capability supports faster recalls, audits, and customer service responses.
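One concrete use of a scanned DOT code is estimating a tire’s age. The sketch below assumes the scanner returns the four-digit date code that ends the DOT tire identification number (two digits for the week, two for the year, the format used on tires made since 2000). The function names are hypothetical, and the date is an approximation that counts weeks from January 1 rather than following any official week-numbering rule.

```python
from datetime import date, timedelta
from typing import Tuple


def parse_dot_date_code(code: str) -> Tuple[int, int]:
    """Split a four-digit WWYY date code into (week, year)."""
    if len(code) != 4 or not code.isdigit():
        raise ValueError(f"expected four digits, got {code!r}")
    week = int(code[:2])
    year = 2000 + int(code[2:])
    if not 1 <= week <= 53:
        raise ValueError(f"week out of range in {code!r}")
    return week, year


def approx_manufacture_date(code: str) -> date:
    """Approximate start of the production week, counted from January 1."""
    week, year = parse_dot_date_code(code)
    return date(year, 1, 1) + timedelta(weeks=week - 1)


def age_in_days(code: str, today: date) -> int:
    """Approximate age of the tire on the given day."""
    return (today - approx_manufacture_date(code)).days
```

For example, the code `2319` means week 23 of 2019. A warehouse could compare `age_in_days` against its own shelf-life policy to flag aging stock, or filter stored date codes to find tires from a production window affected by a recall.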

Real-World Applications in Tire Distribution

Inbound Inventory Management

When new stock arrives, tires are scanned automatically as they’re unloaded. The system identifies each tire’s brand, model, and size, and logs it into the warehouse system. Any mismatches between purchase orders and received goods are flagged immediately.
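The mismatch check described here amounts to comparing expected quantities with scanned quantities. A minimal Python sketch follows, assuming the purchase order is available as a mapping of SKU to expected quantity and each scan has already been resolved to a SKU; `reconcile` and both input shapes are hypothetical, not a specific WMS API.

```python
from collections import Counter
from typing import Dict, Iterable


def reconcile(po_lines: Dict[str, int], scanned_skus: Iterable[str]) -> Dict[str, int]:
    """Return received-minus-ordered quantity for every SKU that differs.

    Negative values are shortages; positive values are overages or
    items that were not on the purchase order at all.
    """
    received = Counter(scanned_skus)
    discrepancies = {}
    for sku in set(po_lines) | set(received):
        delta = received.get(sku, 0) - po_lines.get(sku, 0)
        if delta != 0:
            discrepancies[sku] = delta
    return discrepancies
```

An empty result means the delivery matches the order. The same comparison could be run at dispatch, with order lines in place of purchase order lines.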

Storage and Shelf Organization

AI-scanned tires can be automatically sorted into appropriate zones or bins. The system suggests optimal placement for quick retrieval, improving space utilization and reducing search time for pickers.

Order Fulfillment and Shipping

Before shipping, every tire is re-scanned to confirm the correct model and size for each order. This double-verification process nearly eliminates shipping errors and ensures customers receive the right products.

Returns and Reverse Logistics

When returned tires come back, the scanner verifies their details instantly, confirming whether the product matches original sale data. This simplifies warranty claims and return processing.

Quantifying the Impact: From Errors to Efficiency

Let’s look at the measurable benefits achieved by distributors who have implemented AI tire scanning systems like Scanflow’s Tire Sidewall Scanner:

- 90% Reduction in Inventory Errors: Automatic identification ensures each tire is logged correctly, minimizing loss and miscounts.

- 50% Increase in Warehouse Throughput: Faster scanning reduces handling time per tire.

- 30% Lower Labor Costs: Fewer manual checks and data entries allow staff to focus on high-value tasks.

- 100% Real-Time Visibility: Live synchronization with WMS gives managers a clear picture of stock at all times.

- Improved Customer Satisfaction: Accurate orders and faster deliveries enhance distributor reputation and repeat business.

These numbers reflect not just operational efficiency but also strategic value. Accurate inventory management supports better forecasting, smoother supply chain operations, and stronger customer relationships.

Why Scanflow Leads in Tire Sidewall Scanning

As a pioneer in AI scanning solutions for industrial operations, Scanflow has designed its tire sidewall scanning system specifically for the needs of tire distributors. Unlike generic image recognition tools, Scanflow’s solution has been trained on thousands of tire models across multiple brands to ensure near-perfect recognition accuracy.

Scanflow’s Competitive Advantages

- Specialized AI Models: Built exclusively for tire identification, not general image recognition.

- Hardware Flexibility: Available as fixed stations, mobile scanners, or integrated conveyor systems.

- Plug-and-Play Integration: Connects easily with existing warehouse management platforms.

- Cloud Analytics Dashboard: Provides real-time insights into stock movement, scan statistics, and warehouse performance.

- Scalability: Suitable for both small regional distributors and large global supply networks.

With these capabilities, Scanflow enables distributors to automate their operations from the ground up without needing to replace existing systems.

The Business Case for AI Tire Scanning

The decision to invest in an AI tire sidewall scanner is not just about reducing human errors; it’s about future-proofing your business.

Return on Investment (ROI)

Most distributors recover their investment within the first year of deployment through a combination of reduced losses, labor savings, and faster turnover. The improved data accuracy also helps optimize stock levels, freeing up working capital and improving cash flow.
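A simple payback calculation makes this kind of claim testable against a distributor’s own numbers. The Python sketch below is a deliberately basic model: it ignores financing, ramp-up time, and ongoing fees, and `payback_months` is an illustrative name. Any figures passed to it should be the distributor’s own estimates, since none are given here.

```python
def payback_months(upfront_cost: float, monthly_savings: float) -> float:
    """Months until cumulative savings cover the upfront cost."""
    if monthly_savings <= 0:
        raise ValueError("monthly_savings must be positive")
    return upfront_cost / monthly_savings


def recovers_within_first_year(upfront_cost: float, monthly_savings: float) -> bool:
    """True when the simple payback period is twelve months or less."""
    return payback_months(upfront_cost, monthly_savings) <= 12
```

Here `monthly_savings` would combine the reduced losses, labor savings, and faster turnover mentioned above, each estimated separately.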

Competitive Differentiation

In an industry where margins are tight and delivery accuracy determines client loyalty, the ability to guarantee near-perfect fulfillment is a major competitive advantage. Distributors who leverage AI scanning can promise accuracy and traceability that manual systems simply can’t match.

Sustainability Benefits

By improving inventory accuracy and reducing waste from misplaced or expired tires, AI scanning contributes to a more sustainable and environmentally responsible supply chain, an increasingly important factor for global brands and partners.

Implementing AI Tire Scanning: What to Expect

Introducing AI tire scanning into an existing distribution setup is surprisingly straightforward.

- Assessment and Planning:

Scanflow’s team analyzes the distributor’s warehouse layout, tire flow, and system integration needs.

- Installation and Integration:

The tire sidewall scanner is installed at critical points — inbound, outbound, or quality control areas. Integration with existing software ensures seamless data transfer.

- Training and Onboarding:

Operators receive brief training to handle the scanning interface. Most staff can start using the system effectively within a single day.

- Performance Monitoring:

Scanflow provides ongoing support and analytics dashboards to measure performance improvements, track accuracy rates, and optimize throughput.

Looking Ahead: The Future of Tire Distribution

As AI technology continues to evolve, the potential applications for tire distributors are expanding rapidly. Future iterations of AI tire sidewall scanners may include predictive analytics, automated sorting robots, and even integration with vehicle service centers for full tire lifecycle tracking.

The goal is to create a completely connected tire ecosystem, one where every tire is identifiable, traceable, and verifiable from manufacturer to end user.

In this future, distributors using AI scanning won’t just manage inventory; they’ll orchestrate intelligent supply networks that adapt, learn, and optimize themselves continuously.

Conclusion: A Smarter, More Accurate Future for Tire Distribution

Inventory errors have long been a silent drain on profitability for tire distributors. But with the advent of AI-powered tire sidewall scanners, the days of manual miscounts and mislabeled stock are coming to an end.

By adopting this innovative technology, distributors can achieve:

- Up to 90% reduction in errors

- Real-time inventory accuracy

- Streamlined warehouse operations

- Faster order fulfillment

- Greater customer trust

Scanflow’s AI scanning solutions empower tire distributors to transition from reactive management to proactive intelligence, setting new standards for efficiency, reliability, and growth.